The hope within the Warriors is that the core of Klay Thompson, Draymond Green and Stephen Curry can remain intact despite missing the playoffs for the third time in five seasons. The post Kerr: 'Lot of value' in keeping Dubs' core together appeared first on Buy It At A Bargain – Deals And Reviews.

Tag: Core

QuestDB (YC S20) Is Hiring a Core Database Engineer (Low Latency Java and C++)

Article URL: https://questdb.io/careers/core-database-engineer/

Comments URL: https://news.ycombinator.com/item?id=33356744

How to Adapt Your SEO to Google’s Core Web Vitals and Core Update [FREE WEBINAR TODAY]

Confused about Google’s core web vitals update? Not sure what it means for your SEO? Join my free live webinar on June 29th at 8 a.m. PST to learn more. I’ll cover what core web vitals are, why they matter, and what changes you need to make to your website.

Sign up for the free core web vitals webinar here.

When Google updates roll out, there are usually a few people who think that SEO is dead and the sky is falling.

The good news about the core web vitals update (and the core update) is the sky isn’t falling. However, there are a few changes you’ll want to make.

What Are Google’s Core Web Vitals?

If this is the first you’re hearing about the core web vitals update, here’s a quick rundown:

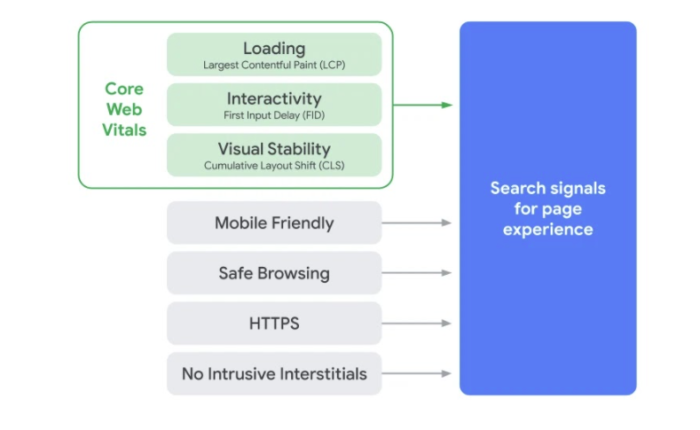

In May 2020, Google announced user experience would become part of their ranking criteria. The factors they’re looking at include:

- Mobile-friendly: Sites should be optimized for mobile browsing.

- Safe-browsing: Sites should not contain misleading content or malicious software.

- HTTPS: The page is served in HTTPS.

- No intrusive interstitials: No pop-ups or other elements that block the main content.

- Core Web Vitals: Fast load times with elements of interactivity and visual stability.

The goal, according to Google, is to deliver a better user experience, which is crucial to the long-term success of websites.

There’s a good chance you’re already doing most of this. However, Core Web Vitals get a bit more complicated than just improving page speed. They also measure largest contentful paint, first input delay, and cumulative layout shift.

These sound complex, but they aren’t.

These features look at how long it takes for your page to start displaying the most important elements, how quickly your site responds to user interactions, and how often layout shifts impact the user experience.

Essentially, Google wants to reward sites that are easy for users to use — which is nothing new. How Google decides which sites are easier to use has changed slightly, which is why marketers are paying attention.

What You’ll Learn in the Core Web Vitals and Core Update Webinar on June 29th

In this webinar, I’ll cover what you need to know about Google’s core web vitals and the core update, including:

- What core web vitals are and how to prepare your site for the upcoming changes.

- Why most users leave your website in just seconds, and what to do about it.

- Methods that SEO agencies will never tell you because they’d rather sell their own solutions.

- Three simple tweaks you can make to your site that can boost your sales by 300 percent or more.

Sign Up Now: Core Web Vitals Live Webinar

I’m really excited to talk about this topic and what it means for the future of SEO. I hope you’ll join me at 8 a.m. PST. Remember, it’s free!

How Core Web Vitals Affect Google’s Algorithms

While we spend a lot of time focusing on keyword optimization, mobile experience, and backlinks, Google pays a lot of attention to the on-page experience. That’s why they’ve rolled out a new set of signals called Core Web Vitals.

These signals will take into account a website’s page loading speed, responsiveness, and visual stability.

In this guide, I’ll explain what Core Web Vitals are and help you figure out how they could impact your rankings.

Core Web Vitals: What Are They and Why Should You Care?

Is this simply another scare tactic by Google to make us revamp everything and get all nervous for a few months?

I don’t think it is; I think this will become a serious ranking factor in the coming years — and for a good reason.

The good news is you may not even have to do anything differently because you’re already providing a high-quality on-page experience for your visitors.

That’s essentially what Core Web Vitals measure. They’re a set of page experience metrics Google uses to determine what type of experience visitors get when they land on your page.

For example, Google will determine if your page is loading fast enough to prevent people from bouncing. If it’s not, you could face a ranking penalty and be replaced by a website that loads faster.

So, now we have the following factors determining the quality of a “page experience” on Google:

- Mobile-friendly: The page is optimized for mobile browsing.

- Safe-browsing: The page doesn’t contain any misleading content or malicious software.

- HTTPS: You’re serving the page in HTTPS.

- No intrusive interstitials: The page doesn’t contain pop-ups or other elements that cover the primary content.

- Core Web Vitals: The page loads quickly, responds promptly to interaction, and stays visually stable.

Many websites are providing these factors already, and if you’re one of them, you have nothing to worry about.

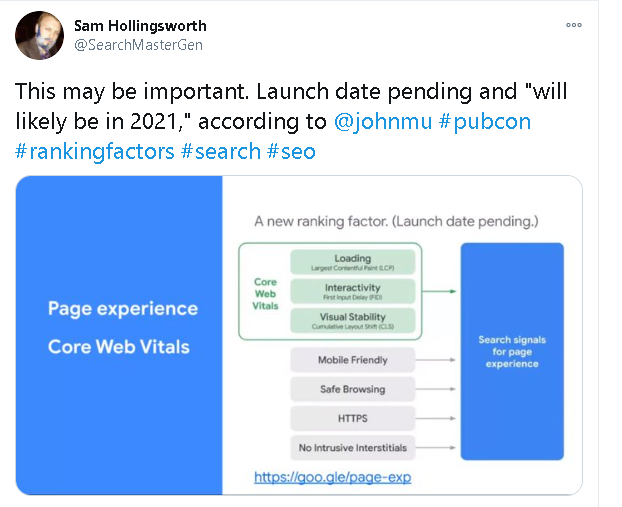

Google’s Announcement about Core Web Vitals becoming a Ranking Factor

I took a look at Google’s press release to see if there was anything that stood out. Google announced that over time, they’d added factors such as page loading speed and mobile-friendliness, but they want to drive home the importance of on-page experience.

They’re planning search ranking changes that factor in page experience. Google says they’ll also incorporate these page experience metrics into the “Top Stories” feature on mobile and remove the AMP requirement.

Google also says they’ll provide six months’ notice before rolling this out, so it does look like we have some time to think about it and get ourselves on track.

Core Web Vitals Metrics

As a website owner, developer, or builder, you consider a million different factors when putting together your website.

If you’re currently working on new sites or making updates to existing ones, you’ll want to keep these three metrics in mind going forward.

Loading: Largest Contentful Paint (LCP)

Largest Contentful Paint or LCP refers to your page loading performance. It covers the perceived loading speed, which means:

How long does it take for your website to start displaying elements that are important to the user?

Do you see how this differs from regular page loading speed now?

There’s a huge difference here.

For example, it’s common practice to keep the most important information and eye-catching content above the fold, right?

Well, that’s no use to anyone if all that interesting above-the-fold content takes six seconds to load.

We see this all the time when sites have images or videos above the fold. They generally take up a lot of space and contain important pieces of context for the rest of the content, but they’re the last to load, so it leaves a large white space at the top of the screen.

Google is paying attention to this because they realize it’s causing a lot of people to bounce.

Google’s benchmark is 2.5 seconds. This means the largest element in the first screen (above the fold), usually a hero image, video, or block of text, should render within 2.5 seconds.

Keep in mind that webpages are displayed in stages. The moment the largest element at the top of your page finishes rendering is your LCP. A slow LCP leads to lower rankings and penalties, and a fast LCP to higher rankings; it’s as simple as that.
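One detail worth knowing: Google assesses the 2.5-second target at the 75th percentile of real page loads, not on a single test or an average. The Python sketch below shows why that matters. The sample load times are made up, and the nearest-rank percentile is an assumption for illustration, not necessarily how Google aggregates field data.

```python
import math

# Google's published LCP thresholds, in seconds.
LCP_GOOD_MAX = 2.5
LCP_NEEDS_IMPROVEMENT_MAX = 4.0


def percentile_75(samples: list[float]) -> float:
    """Nearest-rank 75th percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))  # 1-based rank into the sorted list
    return ordered[rank - 1]


def classify_lcp(samples_s: list[float]) -> str:
    """Rate a page from real-user LCP samples (seconds) at the 75th percentile."""
    p75 = percentile_75(samples_s)
    if p75 <= LCP_GOOD_MAX:
        return "good"
    if p75 <= LCP_NEEDS_IMPROVEMENT_MAX:
        return "needs improvement"
    return "poor"


# Hypothetical LCP measurements from eight visits to one page.
loads = [1.8, 2.1, 2.4, 2.9, 3.1, 2.2, 2.0, 2.6]
print(percentile_75(loads), classify_lcp(loads))  # 2.6 needs improvement
```

Five of the eight loads finish under 2.5 seconds, yet the page still misses the target, because a quarter of visitors wait longer than 2.6 seconds.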

Interactivity: First Input Delay (FID)

The First Input Delay or FID is the responsiveness of your webpage. This metric measures the time between a user’s first interaction with the page and when the browser can respond to that interaction.

This web vital might sound a little complex, so let’s break it down.

Let’s say you’re filling out a form on a website to request more information about a product. You fill out the form and click submit. How long does it take for the browser to start responding to that click?

That’s your First Input Delay. It’s the delay between a user taking action and the browser actually acting on it.

It’s essentially a measure of frustration for the user. How many times have you angrily hit a submit button over and over because it’s taking forever?
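In the browser, FID comes from the first-input performance entry: the time the browser started running event handlers minus the time the user interacted. The Python sketch below models just those two timestamps. The `InputEvent` class is a hypothetical stand-in (its fields are modeled on `startTime` and `processingStart` of `PerformanceEventTiming`), and the example timings are invented.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InputEvent:
    """One user interaction, timed in milliseconds since navigation start.

    The two fields are modeled on startTime and processingStart of the
    browser's PerformanceEventTiming entries.
    """
    start_time: float        # when the user clicked, tapped, or pressed a key
    processing_start: float  # when the browser began running the event handlers


def first_input_delay(events: list[InputEvent]) -> Optional[float]:
    """FID counts only the earliest interaction; later ones are ignored."""
    if not events:
        return None  # the visitor never interacted, so there is no FID
    first = min(events, key=lambda e: e.start_time)
    return first.processing_start - first.start_time


def classify_fid(fid_ms: float) -> str:
    """Google's thresholds: 100 ms or less is good, over 300 ms is poor."""
    if fid_ms <= 100:
        return "good"
    if fid_ms <= 300:
        return "needs improvement"
    return "poor"


# A click at 1.2 s lands while a script is still running; handlers start at 1.34 s.
visit = [InputEvent(1200, 1340), InputEvent(3000, 3010)]
fid = first_input_delay(visit)
print(fid, classify_fid(fid))  # 140 needs improvement
```

Note that FID measures only the wait before handlers start, not how long the handlers themselves take to run.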

This is a huge UX metric because it can also be the difference between capturing a lead or a sale.

Chances are, someone is taking action because they’re interested in whatever it is you’re offering. The last thing you want to do is lose them at the finish line.

Visual Stability: Cumulative Layout Shift (CLS)

Cumulative Layout Shift refers to the frequency of unexpected layout changes and a web page’s overall visual stability.

This one is straightforward, and I have a perfect example.

Have you ever scrolled through a website, seen something interesting, and gone to click on it, only for a button to load at the last second so you end up clicking that instead?

Now you have to go back and find what you were looking for again and (hopefully) click the right link.

Or you’re reading a paragraph, and buttons, ads, and videos keep loading as you read, bumping the paragraph down the page so you have to keep scrolling to follow it.

These are signs of a poor on-page experience, and Google is factoring these issues in as it strives to provide the best experience for users.

Going forward, the focus is on mimicking an “in-person” experience online. As more and more stores shut down and e-commerce continues to boom, it’s up to store/site owners to provide that in-store experience to their users.

For CLS, the goal is a score as close to zero as possible; Google considers 0.1 or less good. The fewer intrusive and frustrating layout changes, the better.
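How do individual shifts add up to one score? Since 2021, Google reports CLS as the largest "session window" of layout shifts: shifts less than 1 second apart are grouped, each window is capped at 5 seconds, and shifts right after user input are ignored. The Python sketch below implements that grouping. The `LayoutShift` class and the example timeline are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LayoutShift:
    time_ms: float                  # when the shift happened
    score: float                    # impact fraction x distance fraction
    had_recent_input: bool = False  # shifts just after user input are expected


def cumulative_layout_shift(shifts: list[LayoutShift]) -> float:
    """Return the largest session-window total of unexpected shifts.

    A window keeps growing while shifts arrive less than 1 s after the
    previous one and less than 5 s after the window's first shift.
    """
    best = 0.0
    window_total = 0.0
    window_start: Optional[float] = None
    last_time: Optional[float] = None
    for shift in sorted(shifts, key=lambda s: s.time_ms):
        if shift.had_recent_input:
            continue  # user-initiated shifts don't count toward CLS
        if (
            window_start is None
            or shift.time_ms - last_time >= 1000
            or shift.time_ms - window_start >= 5000
        ):
            window_start = shift.time_ms  # begin a new session window
            window_total = 0.0
        window_total += shift.score
        last_time = shift.time_ms
        best = max(best, window_total)
    return best


page_load = [
    LayoutShift(100, 0.05),
    LayoutShift(400, 0.10),
    LayoutShift(3000, 0.20),  # 2.6 s gap: starts a second window
    LayoutShift(3500, 0.05),
    LayoutShift(3600, 0.30, had_recent_input=True),  # after a click: ignored
]
print(round(cumulative_layout_shift(page_load), 2))  # 0.25
```

The first window totals 0.15 and the second 0.25, so the page reports 0.25: right at the edge of "poor," even though no single shift scored above 0.2.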

The Effects of Core Web Vitals on Content Strategy and Web Development

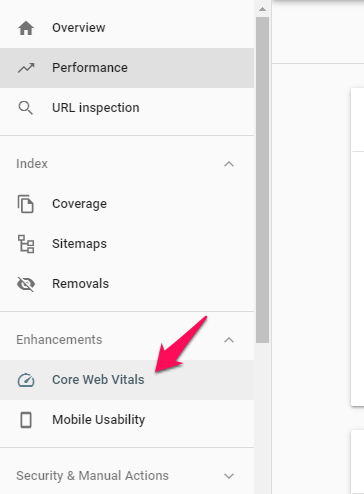

Now let’s talk about how to improve core web vitals and where you can get this information.

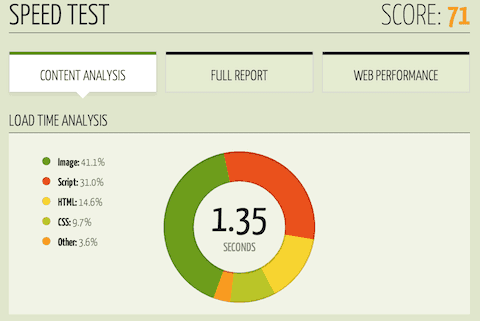

Head to Google Search Console, where the old speed report has been replaced with “Core Web Vitals.”

When you click it, it’ll bring up a report for mobile and one for desktop.

You’ll see a list of poor URLs, URLs that need improvement, and good URLs.

Remember that Google is factoring in the three things we discussed previously to determine the URL’s quality.

So, a poor URL is failing at least one of these: it’s slow to display the most critical content, slow to respond to actions, or its layout shifts too much.

If a URL “needs improvement,” at least one metric falls short of good, but none is poor. A good URL checks out clean on all three.
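The bucketing can be sketched in a few lines of Python, assuming the rule Search Console documents: a URL takes the status of its worst-performing metric, with each metric judged at the 75th percentile. The threshold values below are Google's published ones; the function names and input format are hypothetical.

```python
# Published Core Web Vitals thresholds: (upper bound for "good",
# upper bound for "needs improvement"). Anything above the second is "poor".
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "FID": (100, 300),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout shift score
}
SEVERITY = ["good", "needs improvement", "poor"]


def metric_status(metric: str, p75_value: float) -> str:
    good_max, needs_improvement_max = THRESHOLDS[metric]
    if p75_value <= good_max:
        return "good"
    if p75_value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


def url_status(p75_values: dict[str, float]) -> str:
    """Overall status = the status of the worst-performing metric."""
    statuses = [metric_status(m, v) for m, v in p75_values.items()]
    return max(statuses, key=SEVERITY.index)
```

For instance, `url_status({"LCP": 2.9, "FID": 40, "CLS": 0.05})` returns `"needs improvement"` (like the mobile example below), while a CLS of 0.55 makes a URL `"poor"` no matter how fast it loads.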

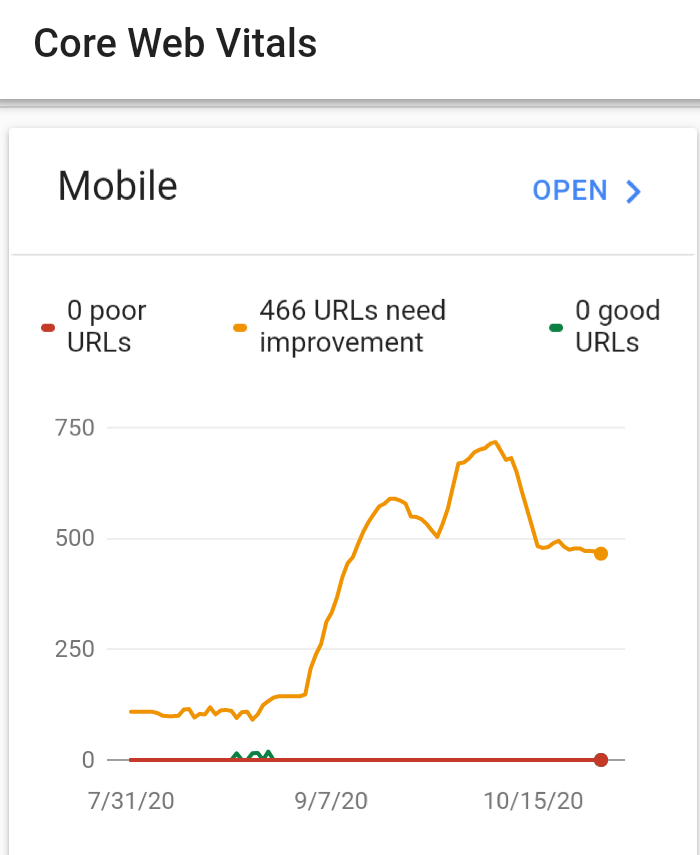

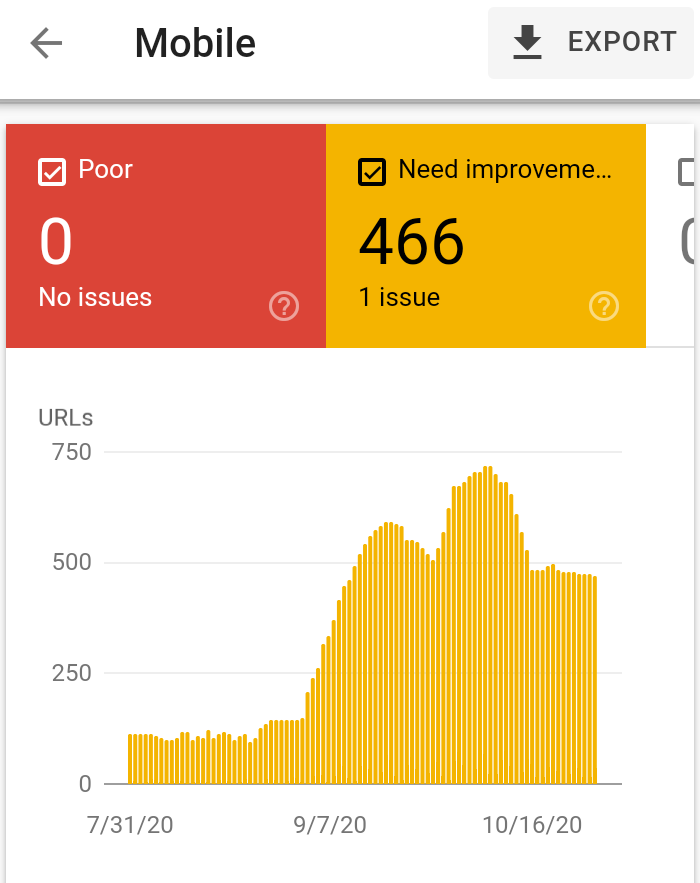

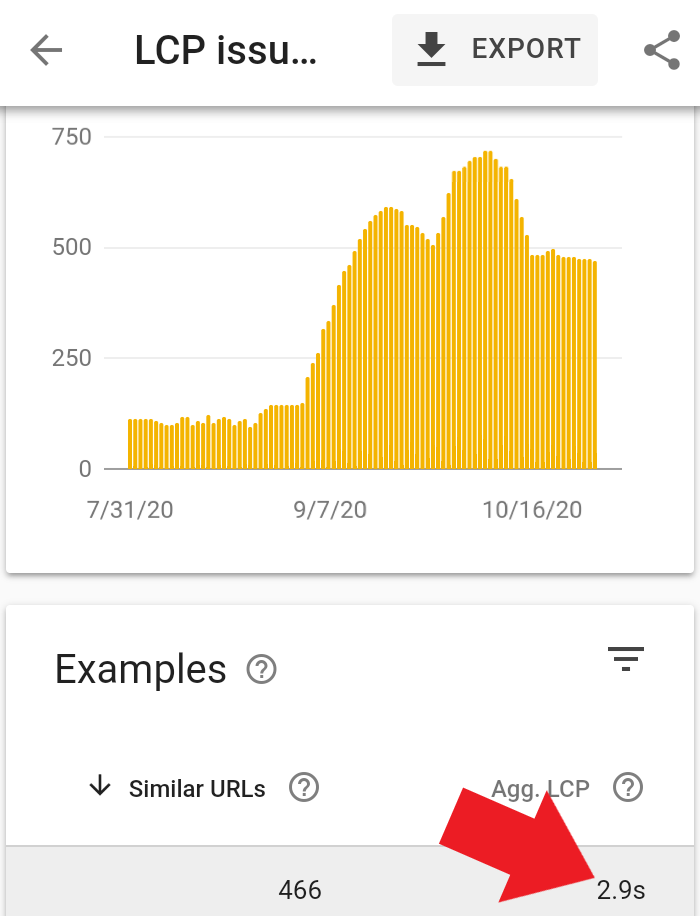

If you open the report on mobile, for example, you’ll see a page that might look like this.

It’s an example of a website that needs improvement, and the issue here is LCP or page loading performance.

The goal is 2.5 seconds on mobile, and this URL has an average LCP of 2.9 seconds, so this shows clear room for improvement.

If we hop over to the desktop report, here are some examples of poor URLs.

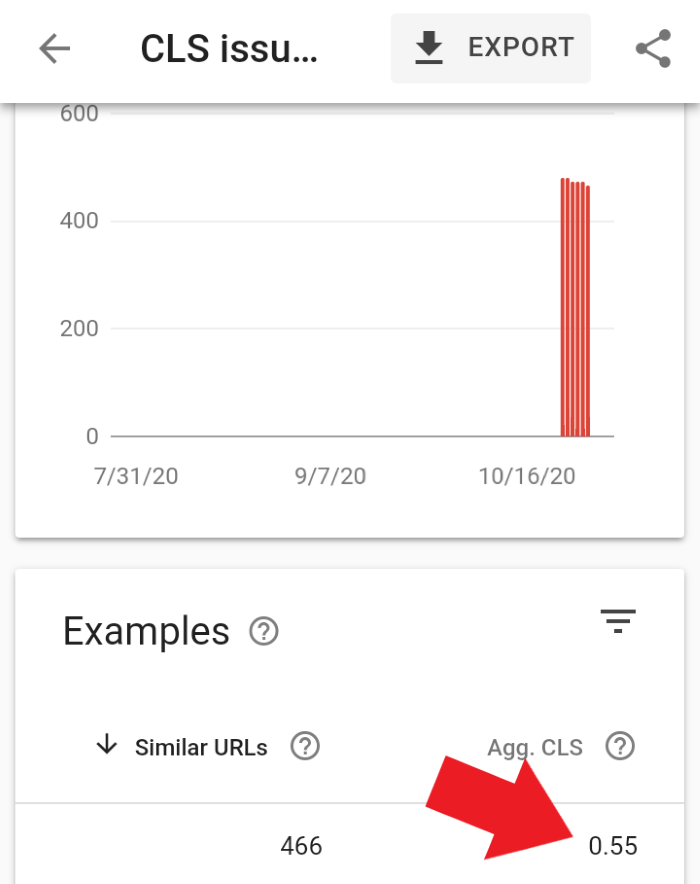

This one has a CLS issue, which means that elements on the page shift around too much as it loads.

A CLS above 0.25 counts as poor (Google considers 0.1 or less good), and this webpage has a CLS of 0.55. The report also says that 472 URLs are affected by this same issue, so this website owner has a lot of work ahead of them to get this fixed.

I’m a big fan of these reports’ transparency because Google makes it easy to locate the problem and fix it.

You can even click the “validate” button when you think you’ve fixed the problem, and Google will verify your progress and update the report.

How to Track Your Website’s Core Web Vitals

Tracking your Core Web Vitals is as simple as going into Search Console and looking at each web property on a case-by-case basis. You’ll want to go in and play around with this to see where you stand.

How to Improve Core Web Vitals

Once you’ve pulled your report, it’s time to make some changes.

You’ll be able to improve the LCP by limiting the amount of content you display at the top of the web page to the most critical information. If it’s not critically important to a problem that the visitor is trying to solve, move it down the page.

Improving FID is simple, and there are four primary issues you’ll want to address:

- Reduce third-party code impact: Third-party scripts such as ads, analytics, and widgets compete with your own code for the browser’s main thread, so the more of them run at once, the longer it takes for an action to start working.

- Reduce JavaScript execution time: Only send the code your users need and remove anything unnecessary.

- Minimize main thread work: The main thread does most of the work, so if this is your issue, cut down on script work and simplify your styles and layouts.

- Keep request counts low and transfer sizes small: Make sure you’re not trying to transfer huge files.
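All four fixes come down to keeping the main thread free. Any task longer than 50 ms is a "long task" during which the page can't respond to input, and Lighthouse's Total Blocking Time (a lab metric Google suggests as a proxy for FID) adds up the excess. Here's a simplified Python sketch; real TBT only counts tasks between First Contentful Paint and Time to Interactive, and the `split_evenly` helper is a hypothetical model of breaking up a script.

```python
LONG_TASK_MS = 50  # tasks longer than this count as "long tasks"


def total_blocking_time(task_durations_ms: list[float]) -> float:
    """Sum the portion of each long task beyond 50 ms.

    Simplified: real TBT only counts tasks between First Contentful Paint
    and Time to Interactive; this sketch takes the task list as given.
    """
    return sum(d - LONG_TASK_MS for d in task_durations_ms if d > LONG_TASK_MS)


def split_evenly(duration_ms: float, chunks: int) -> list[float]:
    """Model breaking one long task into smaller ones (e.g. by yielding)."""
    return [duration_ms / chunks] * chunks


one_big_script = [250]
print(total_blocking_time(one_big_script))        # 200
print(total_blocking_time(split_evenly(250, 5)))  # 0
```

The same 250 ms of work blocks input for 200 ms as one task but not at all as five 50 ms chunks, which is why trimming and splitting JavaScript helps FID.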

Improving CLS requires setting size attributes (width and height) on all images and video elements. When you reserve the correct amount of space for a piece of content before it loads, you shouldn’t experience any page shifts during the process.

It also helps to avoid animating properties such as width, height, top, or left, because those animations trigger layout changes whether you want them to or not. CSS transform animations don’t, so prefer them.
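To see why reserving space works, it helps to know how a single shift is scored: impact fraction (the share of the viewport covered by the moving element's old and new positions combined) times distance fraction (how far it moved, relative to the viewport's largest dimension). The Python sketch below scores one full-width element pushed straight down. The viewport size, element position, and image dimensions are hypothetical, and the vertical-only, full-width setup is a simplification.

```python
def visible_height(top: float, bottom: float, viewport_h: float) -> float:
    """Height of the vertical span [top, bottom) that falls inside the viewport."""
    return max(0.0, min(bottom, viewport_h) - max(top, 0.0))


def layout_shift_score(viewport_w: float, viewport_h: float,
                       elem_top: float, elem_height: float,
                       shift_px: float) -> float:
    """Score one full-width element pushed down by shift_px pixels."""
    if shift_px < 0:
        raise ValueError("this sketch only models downward shifts")
    if shift_px == 0:
        return 0.0  # nothing moved
    old_top, old_bottom = elem_top, elem_top + elem_height
    new_top, new_bottom = old_top + shift_px, old_bottom + shift_px
    # Impact region = union of old and new positions, clipped to the viewport.
    overlap = visible_height(new_top, old_bottom, viewport_h) if new_top < old_bottom else 0.0
    union = (visible_height(old_top, old_bottom, viewport_h)
             + visible_height(new_top, new_bottom, viewport_h) - overlap)
    impact_fraction = union / viewport_h  # full-width element, so width cancels out
    distance_fraction = shift_px / max(viewport_w, viewport_h)
    return impact_fraction * distance_fraction


def reserved_height(display_width: float, attr_width: int, attr_height: int) -> float:
    """Height a browser can reserve from width/height attributes before loading."""
    return display_width * attr_height / attr_width


# A 200 px paragraph sits 100 px down a 400x800 viewport. An image with no
# dimensions loads just above it and pushes it down 300 px.
print(layout_shift_score(400, 800, 100, 200, 300))  # 0.1875
# With width="1200" height="900" set, the browser reserves the slot up front.
print(reserved_height(400, 1200, 900))              # 300.0
```

One unsized image scores 0.1875 on its own, well past the 0.1 "good" mark. Modern browsers use the width and height attributes to work out the aspect ratio and hold that 300 px slot before the image arrives, so the paragraph never moves and the shift scores 0.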

Conclusion

Core Web Vitals and SEO go hand-in-hand, and we all know that we can’t ignore any single ranking factor if we want to beat out our competition and keep our rankings.

Do we know exactly how much of an impact core web vitals have on our ranks? No, we don’t. But Google is paying a lot more attention to the on-page experience.

Is your website following best practices for Core Web Vitals? Let us know!

The post How Core Web Vitals Affect Google’s Algorithms appeared first on Neil Patel.

Google’s May 2020 Core Update: What You Need to Know

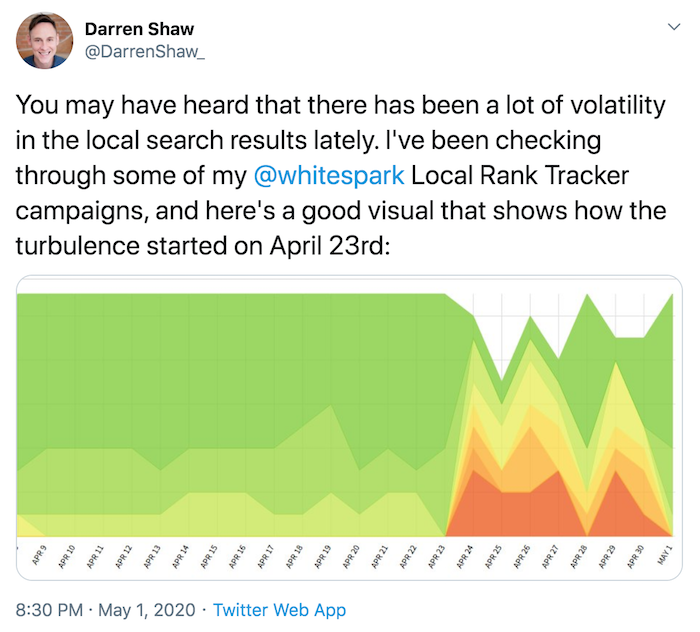

On May 4th, Google started to roll out a major update to its algorithm. They call it a “core” update because it’s a large change to their algorithm, which means it impacts a lot of sites.

To give you an idea of how big the update is, just look at the image above. It’s from SEMrush Sensor, which monitors the movement of results on Google.

The chart tracks Google on a daily basis and when it shows green or blue for the day, it means there isn’t much movement going on. But when things turn red, it means there is volatility in the rankings.

Now the real question is, what happened to your traffic?

If you haven’t already, you should go and check your rankings to see if they have gone up or down. If you aren’t tracking your rankings, you can set up a project on Ubersuggest for free and track up to 25 keywords.

You should also log into your Google Analytics account and check to see what’s happening to your traffic.

Hopefully, your traffic has gone up. If it hasn’t, don’t panic. I have some information that will help you out.

Let’s first start off by going over the industries that have been most impacted…

So what industries were affected?

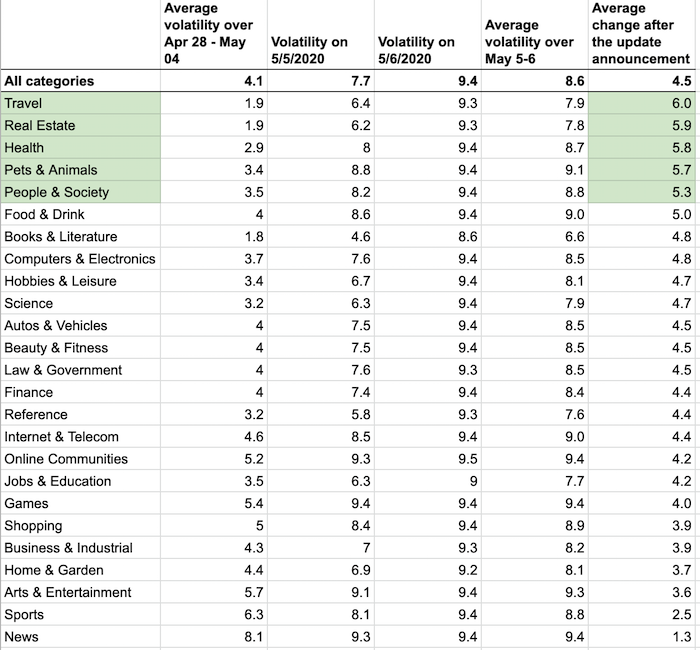

Here are the industries that got affected.

As you can see, travel, real estate, health, pets & animals, and people & society saw the biggest fluctuations in rankings.

Other industries were also affected… the ones at the bottom of the list were the least affected, such as “news.”

There was also a shakeup in local SEO results, but that started before the core update.

One big misconception that I hear from people new to SEO is that if you have a high domain authority or domain score (if you aren’t sure what yours is, go here and put in your URL), you’ll continually get more traffic and won’t be affected by updates. That is false.

To give you an idea, here are some well-known sites that saw their rankings dip according to our index at Ubersuggest:

- Spotify.com

- Creditkarma.com

- LinkedIn.com

- Legoland.com

- Nypost.com

- Ny.gov

- Burlington.com

More importantly, we saw some trends on sites that got affected versus ones that didn’t.

Update your content frequently

I publish 4 articles a month on this blog. Every Tuesday, like clockwork, I publish a new post.

But do you know how often I update my old content?

Take a guess.

Technically, I don’t update my own content, but I have 3 people who work for me and all they do is go through old blog posts and update them.

In any given month, my team updates at least 90 articles. And when I say update, I am not talking about just adjusting a sentence or adding an image. I am talking about adding a handful of new paragraphs, deleting irrelevant information, and sometimes even rewriting entire articles.

They do whatever it takes to keep articles up to date and valuable for readers, just like Wikipedia constantly updates its content.

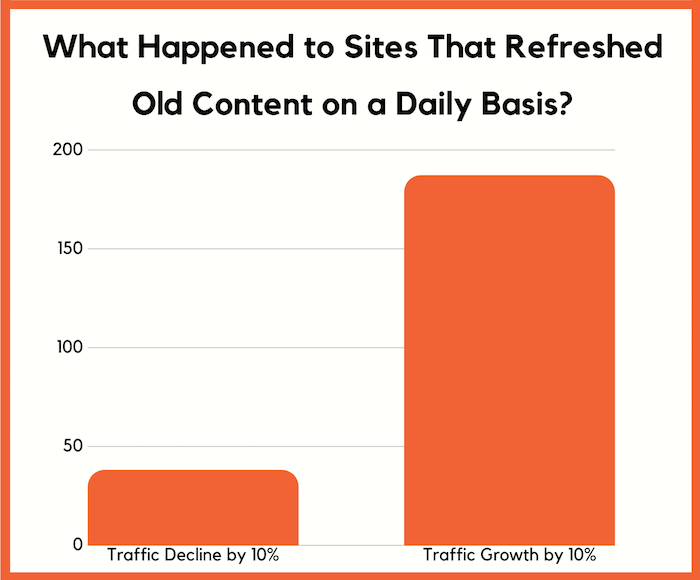

Here’s an interesting stat for you: We know for certain that 641 sites that we are tracking are updating old content on a daily basis.

Can you guess how many of them saw a search traffic dip of 10% or more?

Only 38! That’s roughly 5.93%, which is extremely low.

What’s crazy, though, is that 187 sites saw an increase in their search traffic of 10% or more.

One thing to note is when we are calculating organic search traffic estimates, we look at the average monthly volume of a keyword as well as click-through rates based on ranking. So holidays such as May 1, which is Labor Day for most of the world, didn’t skew the results.
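That kind of estimate can be sketched as: for each keyword, average monthly search volume times the expected click-through rate for the position the site ranks in, summed across keywords. The CTR curve below is a hypothetical placeholder, not Ubersuggest's actual model, and the example rankings are invented.

```python
# Hypothetical click-through rates by ranking position. These are illustrative
# placeholders, not Ubersuggest's actual numbers.
CTR_BY_POSITION = {1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05}


def estimate_monthly_traffic(rankings: list[tuple[int, int]]) -> float:
    """Estimate organic visits from (average monthly search volume, position) pairs.

    Because the inputs are monthly averages rather than daily click logs,
    a one-day dip such as a public holiday doesn't move the estimate.
    Positions missing from the table are treated as getting no clicks.
    """
    return sum(volume * CTR_BY_POSITION.get(position, 0.0)
               for volume, position in rankings)


site = [(1000, 1), (2000, 3), (5000, 12)]
print(round(estimate_monthly_traffic(site)))  # 500
```

Under this model, only ranking changes move the estimate: if the first keyword slipped from position 1 to 3, its share would drop from 300 to 100 visits.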

Now, to clarify, I am not talking about producing new content on a daily or even weekly basis. These sites are doing what I do on NeilPatel.com… they are constantly updating their old content.

Again, there is no “rubric” on how to update your old content as it varies per article, but the key is to do whatever it takes to keep it relevant for your readers and ensure that it is better than the competition.

If you still want some guidance on updating old content, here is what I tell my team:

- If the content is no longer relevant to a reader, either delete the page and 301 redirect it to the most relevant URL on the site or update it to make it relevant.

- Are there ways to make the content more actionable and useful? For example, would adding infographics, step-by-step instructions, or videos to the article make it more useful? If so, add them.

- Check to see if there are any dead links and fix them. Dead links create a poor user experience.

- If the article is a translated article (I have a big global audience), make sure the images and videos make sense to anyone reading the content in that language.

- Find the 5 main terms each article ranks for, then Google those terms. What do the pages ranking in the top 10 do really well that we don’t?

- Can you make the article simpler? Remove fluff and avoid using complex words that very few people can understand.

- Does the article discuss a specific year or time frame? If possible, make the article evergreen by avoiding the usage of dates or specific time ranges.

- If the article covers a specific problem people are facing, look at Quora before updating the article. The popular answers there will give you a sense of what people are looking for.

- Is this article a duplicate? Not from a wording perspective, but are you covering pretty much the same concept as another article on your site? If so, consider merging them and 301-redirecting one URL to the other.
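Two of the items above end in a 301 redirect. Most sites set these up in their CMS or web server configuration, but the mechanics fit in a short Python sketch using the standard library's `http.server`: look up the retired path and answer with status 301 and a `Location` header. The paths in `REDIRECTS` are hypothetical examples.

```python
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical map of deleted or merged article paths to their replacements.
REDIRECTS = {
    "/old-seo-checklist/": "/seo-checklist/",
    "/core-update-tips/": "/google-core-update/",  # merged duplicate article
}


class RedirectHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        target = REDIRECTS.get(self.path)
        if target is None:
            self.send_response(404)
            self.end_headers()
            return
        # 301 = moved permanently, which signals to search engines that the
        # target is the page's new home rather than a temporary detour.
        self.send_response(301)
        self.send_header("Location", target)
        self.end_headers()

    def log_message(self, format, *args):
        pass  # silence per-request logging in this sketch


# To try it locally (blocks until interrupted):
# ThreadingHTTPServer(("127.0.0.1", 8000), RedirectHandler).serve_forever()
```

This sketch matches paths exactly, so a URL with a query string such as `/old-seo-checklist/?ref=x` would fall through to the 404; a production setup would normally handle that in the server's rewrite rules.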

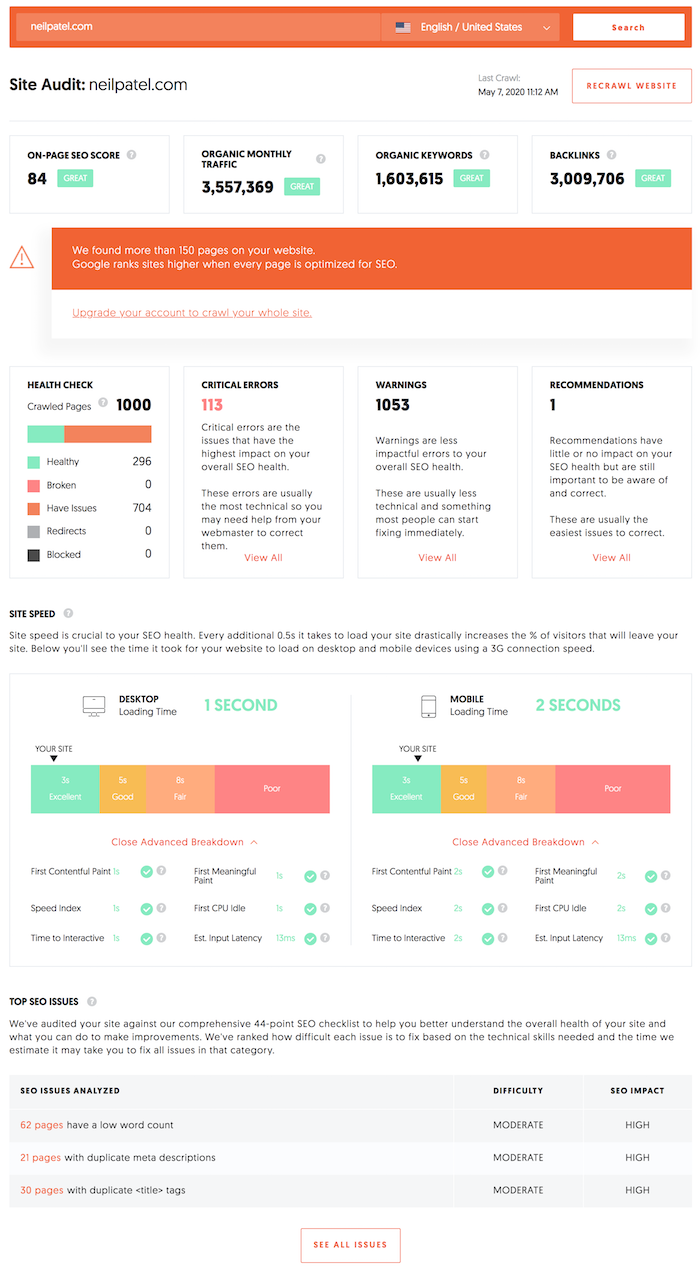

Fix your thin content

Here’s another interesting stat for you. On average, Ubersuggest crawls 71 websites every minute. And by crawl, I mean users are putting in URLs to check for SEO errors.

One error that our system looks at is thin content (pages with low word counts).
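A thin-content check boils down to counting the visible words on each page and flagging pages below a cutoff. The Python sketch below uses the standard library's `HTMLParser` and skips script and style contents. The 300-word threshold is a hypothetical placeholder (Ubersuggest's actual cutoff isn't given here), and the exempt paths mirror the home, about, and contact pages excluded in the analysis below.

```python
from html.parser import HTMLParser

# Hypothetical cutoff: Ubersuggest's actual threshold isn't stated in this post.
THIN_WORD_THRESHOLD = 300
# Pages excluded from the thin-content analysis (home, about, contact).
EXEMPT_PATHS = {"/", "/about/", "/contact/"}


class VisibleWordCounter(HTMLParser):
    """Counts words in text nodes, skipping script and style contents."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self.words = 0
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.words += len(data.split())


def word_count(html: str) -> int:
    counter = VisibleWordCounter()
    counter.feed(html)
    counter.close()
    return counter.words


def thin_pages(pages: dict[str, str]) -> list[tuple[str, int]]:
    """Return (path, word count) for non-exempt pages under the threshold."""
    flagged = []
    for path, html in sorted(pages.items()):
        if path in EXEMPT_PATHS:
            continue
        count = word_count(html)
        if count < THIN_WORD_THRESHOLD:
            flagged.append((path, count))
    return flagged
```

This count includes navigation and footer text, so a real audit would usually restrict it to the main content area before comparing against a threshold.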

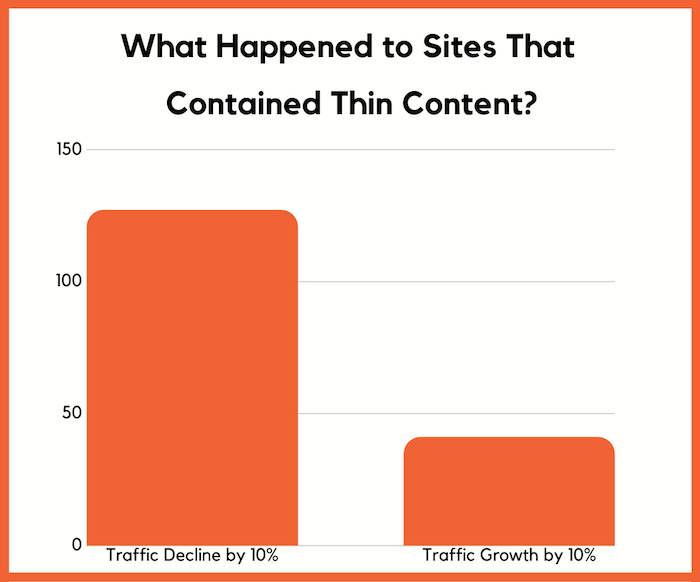

On average, 46% of the websites we analyze have at least one page that is thin in content. Can you guess how many of those sites got impacted by the latest algorithm update?

We don’t have enough data on all of the URLs as the majority of those sites get very little to no search traffic as they are either new sites or haven’t done much SEO.

But when we look at the last 400 sites in our system that were flagged with thin content warnings for pages other than their contact page, about page, or home page, and had at least 1,000 visitors a month from Google, they saw a massive shift in rankings.

127 of the sites saw a decrease in search traffic of at least 10%, while 41 saw an increase of at least 10%.

Sites with thin content saw a roughly 3 times higher likelihood of being affected in a negative way than a positive one. Of course, the majority of the sites with thin content saw little to no change at all, but still, a whopping 31.75% saw a decrease.
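The percentages in this post all come from simple counts, so they're easy to double-check. This small Python helper (a hypothetical name, using only the counts reported in the post) reproduces the thin-content figures: 127 of 400 sites is 31.75%, and 127 drops versus 41 gains is a ratio of about 3.1.

```python
def cohort_summary(total: int, dropped: int, gained: int) -> dict[str, float]:
    """Share of sites that lost or gained at least 10%, plus the down/up ratio."""
    return {
        "dropped_pct": round(100 * dropped / total, 2),
        "gained_pct": round(100 * gained / total, 2),
        "unchanged_pct": round(100 * (total - dropped - gained) / total, 2),
        "down_to_up_ratio": round(dropped / gained, 2),
    }


print(cohort_summary(400, 127, 41))
# {'dropped_pct': 31.75, 'gained_pct': 10.25, 'unchanged_pct': 58.0, 'down_to_up_ratio': 3.1}
```

The same helper applies to the other cohorts in this post: the 641 sites updating content daily (38 down, 187 up) and the 363 sites with duplicate meta tags (151 down, 89 up).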

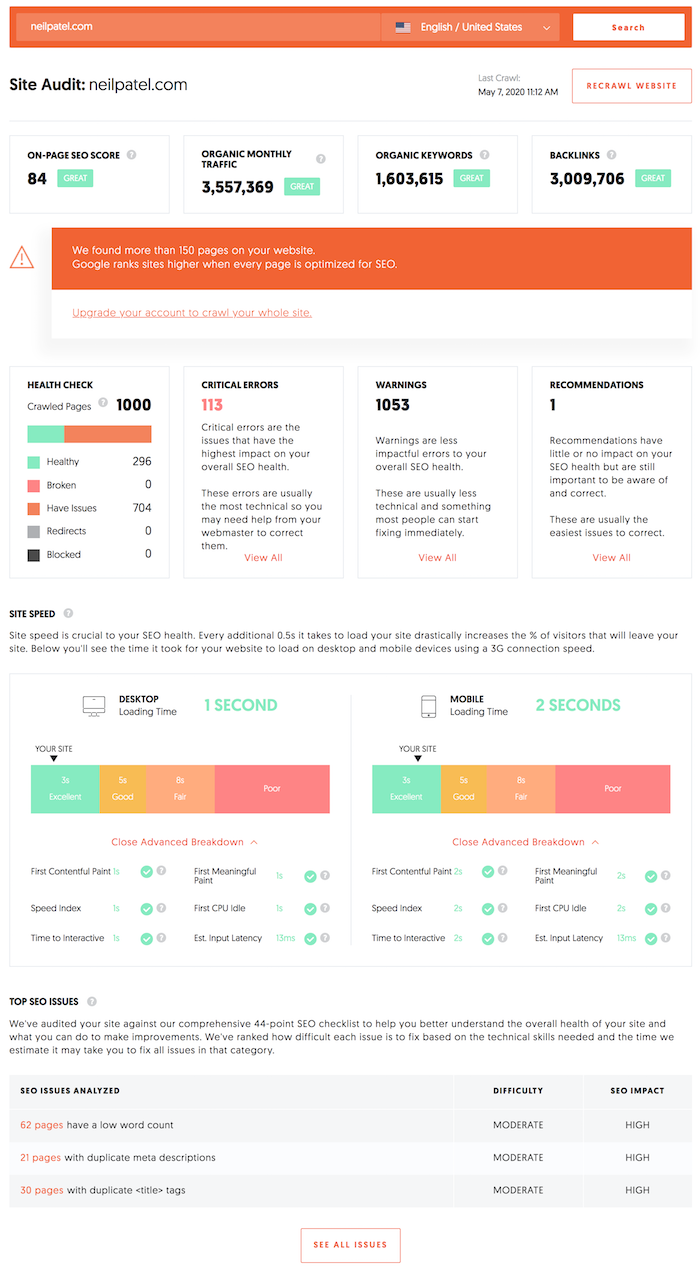

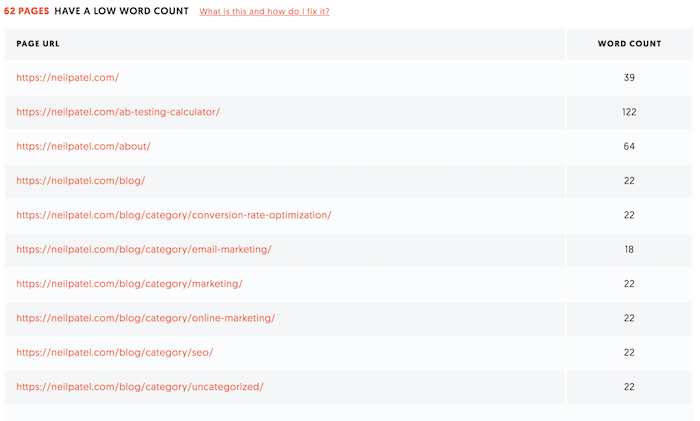

If you don’t know if you have thin content, go here and put in your URL.

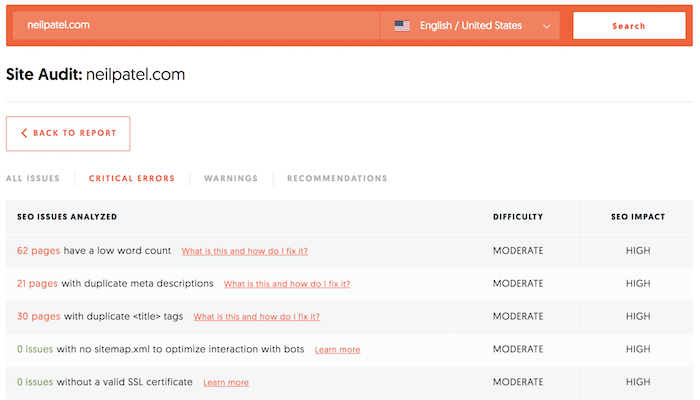

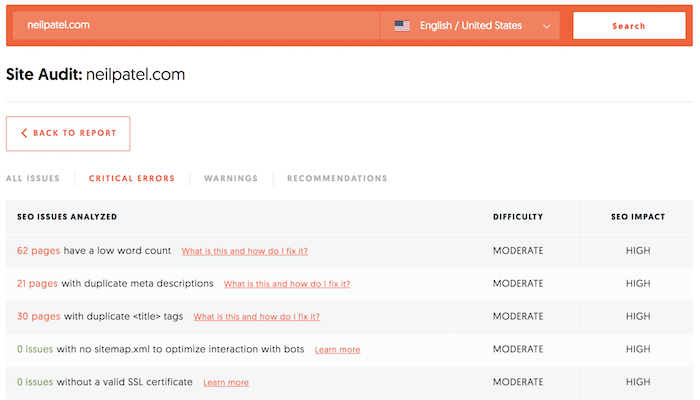

You’ll see a report that looks something like this:

I want you to click on the “Critical Errors” box.

You’ll now see a report that looks like:

Look to see if there are any “low word count” errors. If there are, click on the number and it will take you to a page that shows you all of the pages with a low word count.

You won’t need to fix them all, as some pages, like your contact page or category pages, may not need thousands of words.

And in other cases, you may be able to get the point across to a website visitor in a few hundred words or even through images. An example would be if you have an article on how to tie a tie, you may not have too many words because it’s easier to show people how to do so through a video or a series of images.

But for the pages that should be more in-depth, you should fix them. Here are the three main questions to consider when fixing thin content pages:

- Do you really need to add more words – if you can get the message across in a few hundred words or through images or videos, it may be enough. Don’t add words when they aren’t needed. Think of the user experience instead. People would rather have the answer to their question in a few seconds than wait minutes.

- How does your page compare to the competition – look at similar pages that are ranking on page 1. Do they have more content than you or less? This will give you an idea if you need to expand your page, especially if everyone who ranks on page 1 has at least a few thousand words on their page.

- Does it even make sense to keep the page – if it provides little to no value to a reader and you can’t make it better by updating it, you may want to consider deleting it and 301-redirecting the URL to another similar page on your site.

Fix your SEO errors

Another interesting finding from digging through our Ubersuggest data is that sites with more SEO errors were hit harder.

Now, this doesn’t mean that if you have a ton of SEO errors you can’t rank or you are going to get hit by an algorithm update.

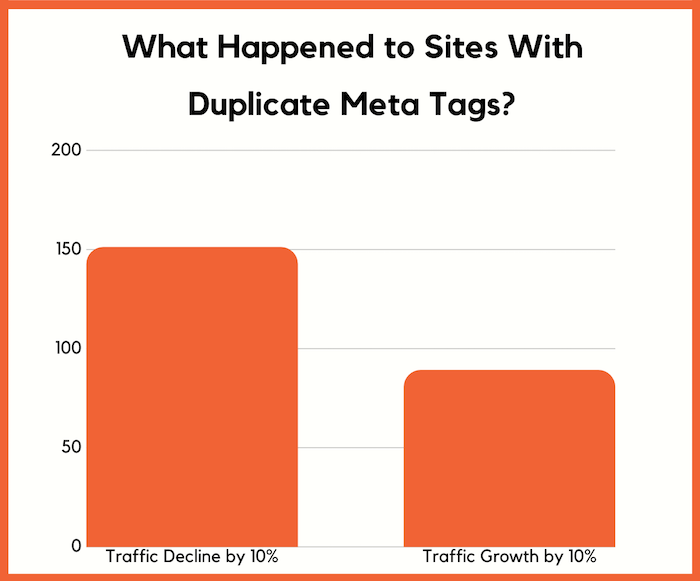

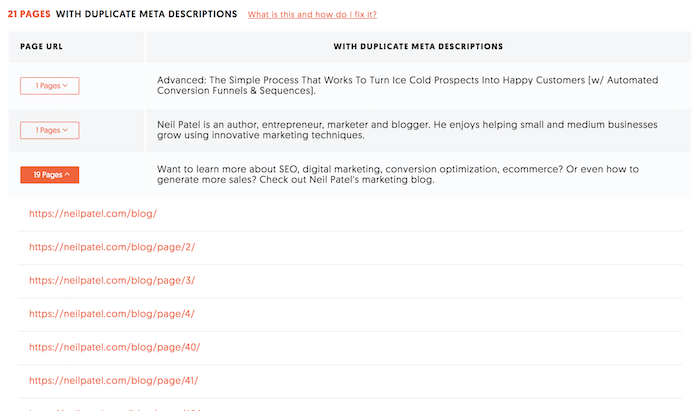

More specifically, one type of error hurt sites more than others: duplicate title tags and meta descriptions.

One thing to note was that many sites have duplicate meta tags, but when a large portion of your pages have duplicate meta tags, it usually creates problems.

So we dug up sites that contained duplicate meta tags and title tags for 20% or more of their pages.
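Measuring this on your own site is straightforward: group pages by title (and separately by meta description), count the pages that share a value with at least one other page, and compare that share with the 20% cutoff above. In the Python sketch below, the page dictionaries and field names are hypothetical, and normalizing case and whitespace before comparing is an assumption.

```python
from collections import defaultdict

# Share of pages at which duplicates started causing problems in the data above.
DUPLICATE_SHARE_THRESHOLD = 0.20


def duplicate_share(pages: list[dict[str, str]], field: str) -> float:
    """Fraction of pages whose `field` value also appears on another page."""
    urls_by_value: dict[str, list[str]] = defaultdict(list)
    for page in pages:
        value = " ".join(page.get(field, "").split()).lower()  # normalize case and whitespace
        if value:  # missing tags are a separate error, so skip them here
            urls_by_value[value].append(page["url"])
    duplicated = sum(len(urls) for urls in urls_by_value.values() if len(urls) > 1)
    return duplicated / len(pages) if pages else 0.0


def has_widespread_duplicates(pages: list[dict[str, str]]) -> bool:
    """True if titles or meta descriptions are duplicated on 20%+ of pages."""
    return any(duplicate_share(pages, field) >= DUPLICATE_SHARE_THRESHOLD
               for field in ("title", "meta_description"))
```

On a five-page site where two pages are both titled "SEO Tips," the title share is 0.4, so the site would be flagged. As noted below, some duplicates, such as paginated category pages, may be fine to leave.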

Most of these sites didn’t get much traffic in general, but for the 363 that we could dig up that generated at least 1,000 visits a month from Google, 151 saw a decrease in traffic by at least 10%.

89 of them saw increases in traffic of 10% or more, but still, roughly 41.6% of sites with duplicate meta tags saw a huge dip. If you have duplicate meta tags, you should get them fixed.

To double check if you do, put your URL in here again. It will load this report again:

And then click on the critical errors again. You’ll see a report that looks like this:

Look for any errors that say duplicate meta description or title tag. If you see it, click on the number and it will take you to a page that breaks down the duplicates.

Again, your site doesn’t have to be perfect and you’ll find in some cases that you have duplicates that don’t need to be fixed, such as category pages with pagination.

But in most cases, you should fix and avoid having duplicate meta description and title tags.

Conclusion

Even if you do everything I discussed above, there is no guarantee that you won’t be negatively impacted by an algorithm update. Each one is different, and Google’s goal is to create the best experience for searchers.

If you look at the above issues, you’ll notice that fixing them should create a better user experience and that should always be your goal.

It isn’t about winning on Google. SEO is about providing a better experience than your competition. If that’s your core focus, in the long run, you’ll find that you’ll do better than your competition when it comes to algorithm updates.

So how was your traffic during the last update? Did it go up or down, or just stay flat?

The post Google’s May 2020 Core Update: What You Need to Know appeared first on Neil Patel.

SEO Updates: What You Need to Know About the June Core Algorithm Update

We’re trying out something new! Our SEO scouts have always been on the lookout for Google announcements and SEO updates. Before this, it was something we shared between the team, but then one day someone asked, why not share it with our audience and it clicked! That’s why we are kickstarting this new SEO updates …

How I Beat Google’s Core Update by Changing the Game

Google released a major update. They typically don’t announce their updates, but when they do, you know it is going to be big.

And that’s what happened with the most recent update that they announced.

A lot of people saw their traffic drop. And of course, at the same time, people saw their traffic increase because when one site goes down in rankings another site moves up to take its spot.

Can you guess what happened to my traffic?

Well, based on the title of the post, you are probably going to guess that it went up.

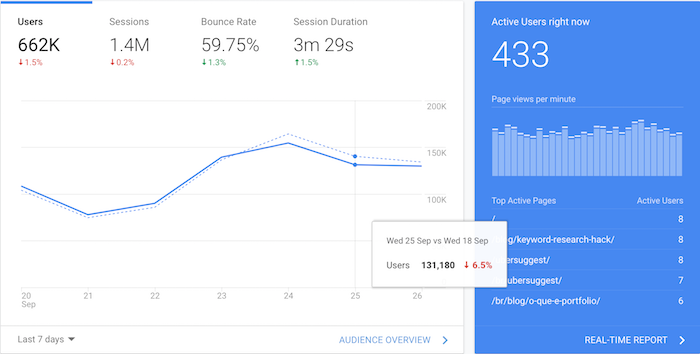

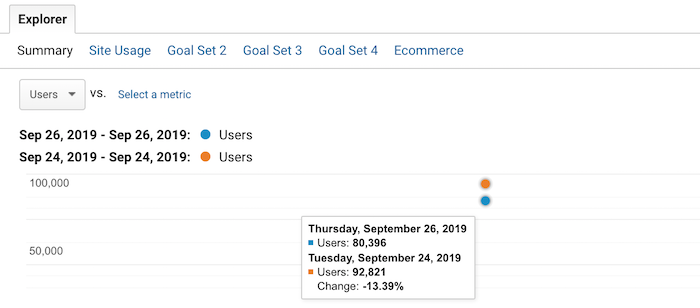

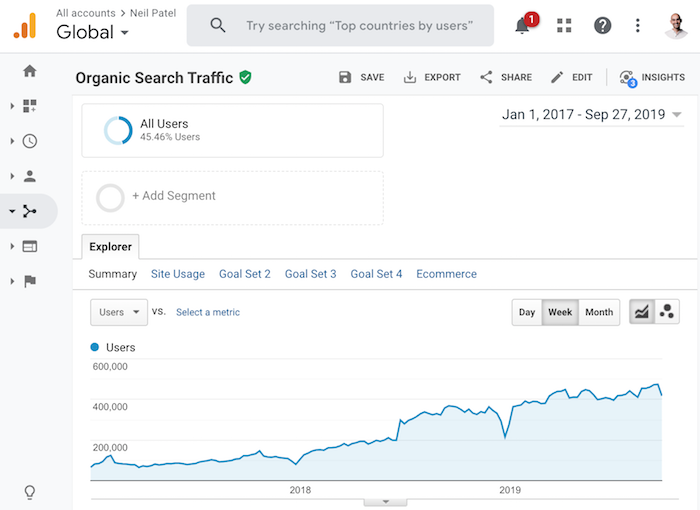

Now, let’s see what happened to my search traffic.

My overall traffic has already dipped by roughly 6%. When you look at my organic traffic, you can see that it has dropped by 13.39%.

I know what you are thinking… how did you beat Google’s core update when your traffic went down?

What if I told you that I saw this coming and I came up with a solution and contingency strategy in case my organic search traffic ever dropped?

But before I go into that, let me first break down how it all started and then I will get into how I beat Google’s core update.

A new trend

I’ve been doing SEO for a long time… roughly 18 years now.

When I first started, Google algorithm updates still sucked, but they were much simpler. For example, you could get hit hard if you built spammy links or if your content was super thin and provided no value.

Over the years, their algorithm has gotten much more complex. Nowadays, it isn’t about if you are breaking the rules or not. Today, it is about optimizing for user experience and doing what’s best for your visitors.

But that in and of itself is never very clear. How do you know that what you are doing is better for a visitor than your competition?

Honestly, you can never be 100% sure. The only one who actually knows is Google, and even that depends on whoever they assign to code or adjust the algorithm.

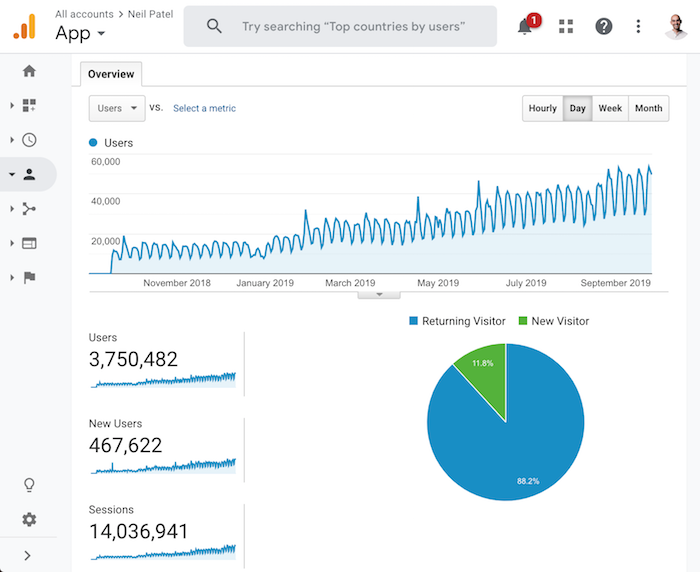

Years ago, I started to notice a new trend with my search traffic.

Look at the graph above. Do you see the trend?

And no, my traffic doesn’t just climb up and to the right. There are a lot of dips in there. But, of course, my rankings eventually started to continually climb because I figured out how to adapt to algorithm updates.

On a side note, if you aren’t sure how to adapt to the latest algorithm update, read this. It will teach you how to recover your traffic… assuming you saw a dip. Or if you need extra help, check out my ad agency.

In many cases after an algorithm update, Google continues to fine-tune and tweak the algorithm. And if you saw a dip when you shouldn’t have, you’ll eventually start recovering.

But even then, there was one big issue. Back in 2017, compared to all of the previous years, I started to feel like I didn’t have control as an SEO anymore. I could no longer guarantee my success, even if I did everything correctly.

Now, I am not trying to blame Google… they didn’t do anything wrong. Overall, their algorithm is great and relevant. If it wasn’t, I wouldn’t be using them.

And just like you and me, Google isn’t perfect. They continually adjust and aim to improve. That’s why they do over 3,200 algorithm updates in a year.

But still, even though I love Google, I didn’t like the feeling of being helpless, because I knew if my traffic took a drastic dip, I would lose a ton of money.

I need that traffic, not only to drive new revenue but, more importantly, to pay my team members. The concept of not being able to pay my team on any given month is scary, especially when your business is bootstrapped.

So what did I do?

I took matters into my own hands

Although I love SEO, and I think I’m pretty decent at it based on my traffic and my track record, I knew I had to come up with another solution that could provide me with sustainable traffic that could still generate leads for my business.

In addition to that, I wanted to find something that wasn’t “paid,” as I was bootstrapping. Just like how SEO was starting to have more ups and downs compared to what I’ve seen in my 18-year career, I knew the cost of paid ads would continually rise.

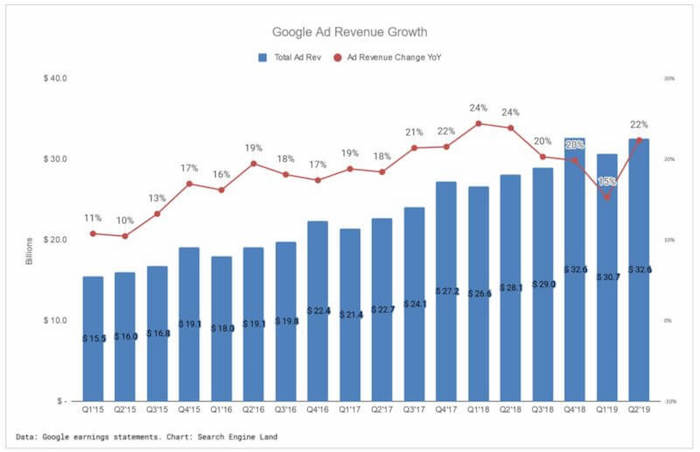

Just look at Google’s ad revenue. They have some ups and downs every quarter but the overall trend is up and to the right.

In other words, advertising will continually get more expensive over time.

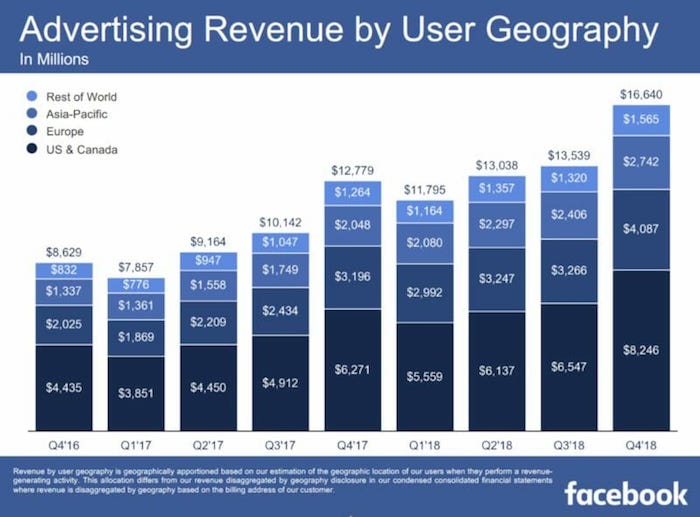

And it’s not just Google either. Facebook Ads keep getting more expensive as well.

I didn’t want to rely on a channel that would cost me more next year and the year after because it could get so expensive that I may not be able to profitably leverage it in the future.

So, what did I do?

I went on a hunt to figure out a way to get direct, referral, and organic traffic that didn’t rely on any algorithm updates. (I will explain what I mean by organic traffic in a bit.)

I went on my mission

With the help of my buddy, Andrew Dumont, I went searching for websites that continually received good traffic even after algorithm updates.

Here were the criteria that we were looking for:

- Sites that weren’t reliant on Google traffic

- Sites that didn’t need to continually produce more content to get more traffic

- Sites that weren’t popular due to social media traffic (we both saw social traffic dying)

- Sites that didn’t leverage paid ads in the past or present

- Sites that didn’t leverage marketing

In essence, we were looking for sites that were popular because people naturally liked them. Our intentions at first weren’t to necessarily buy any of these sites. Instead, we were trying to figure out how to naturally become popular so we could replicate it.

Do you know what we figured out?

I’ll give you a hint.

Think of it this way: Google doesn’t get the majority of its traffic from SEO. And Facebook doesn’t get its traffic because it ranks everywhere on Google or because people share Facebook.com on the social web.

Do you know how they are naturally popular?

It comes down to building a good product.

That was my aha! moment. Why continually crank out thousands of pieces of content, which isn’t scalable and is a pain as you eventually have to update your old content, when I could just build a product?

That’s when Andrew and I stumbled

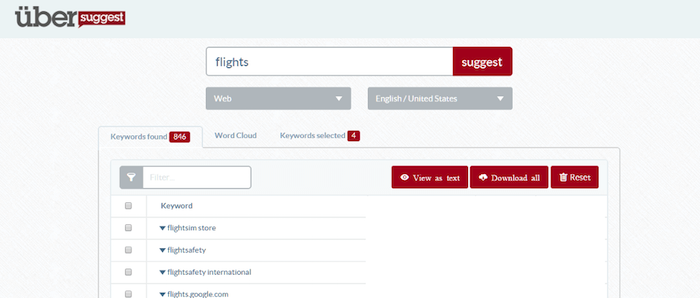

upon Ubersuggest.

Now, the Ubersuggest you see today isn’t what it looked like in February 2017, when I bought it.

It used to be a simple tool that just showed you Google Suggest results for any query.

Before I took it over, it was generating 117,425 unique visitors per month and had 38,700 backlinks from 8,490 referring domains.

All of this was natural. The original founder didn’t do any marketing. He just built a product and it naturally spread.

The tool did, however, get roughly 43% of its traffic from organic search. Now, can you guess what the main keyword was?

The term was “Ubersuggest”.

In other words, its organic traffic mainly came from its own brand, which isn’t really reliant on SEO or affected by Google algorithm updates. That’s also what I meant when I talked about organic traffic that wasn’t reliant on Google.

Since then, I’ve gone a bit crazy with Ubersuggest and released loads of new features… from daily rank tracking to a domain analysis and site audit report to a content ideas report and backlinks report.

In other words, I’ve been making it a robust SEO tool that has everything you need and is easy to use.

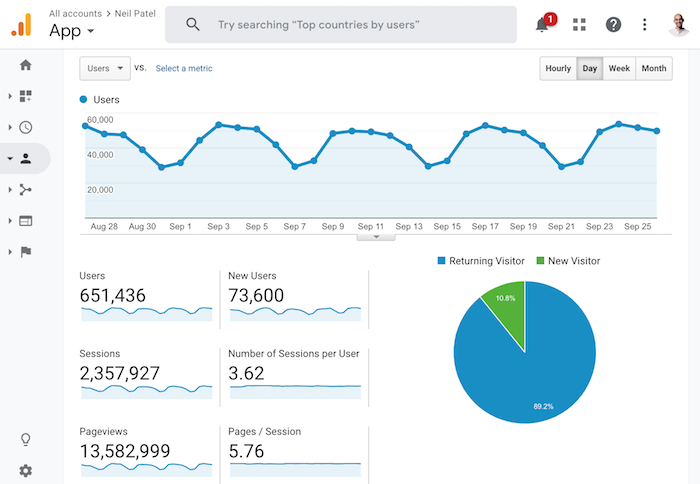

It’s been so effective that the traffic on Ubersuggest went from 117,425 unique visitors to a whopping 651,436 unique visitors, who generate 2,357,927 visits and 13,582,999 pageviews per month.

Best of all, the users are sticky, meaning the average Ubersuggest user spends over 26 minutes on the application each month. This means they are engaged and are likely to convert into customers.

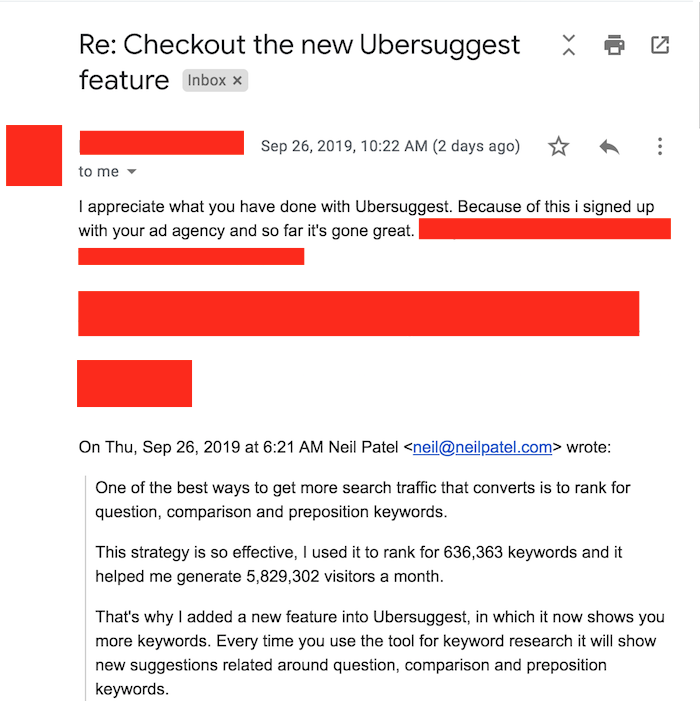

As I get more aggressive with my Ubersuggest funnel and start collecting leads from it, I expect to receive many more emails like that.

And over the years, I expect the traffic to continually grow.

Best of all, do you know what happens to the traffic on Ubersuggest when my site gets hit by a Google algorithm update or when my content stops going viral on Facebook?

It continually goes up and to the right.

Now, unless you dump a ton of money and time into replicating what I am doing with Ubersuggest for your own industry, you won’t generate the results I am generating.

As my mom says, I’m kind of crazy…

But that doesn’t mean you can’t do well on a budget.

Back in 2013, I did a test where I released a tool on my old blog, Quick Sprout. It was an SEO tool that wasn’t too great and, honestly, I probably spent too much money on it.

Here were the stats for the first 4 days after releasing the tool:

- Day #1: 8,462 people ran 10,766 URLs

- Day #2: 5,685 people ran 7,241 URLs

- Day #3: 1,758 people ran 2,264 URLs

- Day #4: 1,842 people ran 2,291 URLs

Even after the launch traffic died down, more than 1,000 people per day still used the tool. And, over time, that number actually climbed to over 2,000.

It was at that point in my career that I realized people love tools.

I know what you are thinking though… how do you do this on a budget, right?

How to build tools without hiring developers or spending lots of money

What’s silly is (and I wish I had known this before I built my first tool on Quick Sprout back in the day) that tools already exist for every industry.

You don’t have to create something new or hire some expensive developers. You can just use an existing tool on the market.

And if you want to go crazy like me, you can start adding multiple tools to your site… just like how I have an A/B testing calculator.

So how do you add tools without breaking the bank?

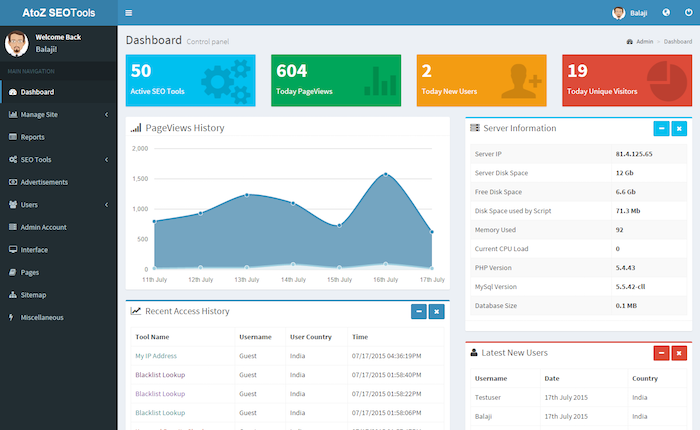

You buy them from sites like Code Canyon. For $2 to $50, you can find tools for just about anything. For example, if I wanted an SEO tool, Code Canyon has a ton to choose from. Just look at this one.

Not a bad looking tool that you can have on your website for just $40. You don’t have to pay monthly fees and you don’t need a developer… it’s easy to install and it doesn’t cost much in the grand scheme of things.

And here is the crazy thing: The $40 SEO tool has more features than the Quick Sprout one I built, has a better overall design, and costs 0.1% of what mine did.

If only I had known that before I built it years ago. :/

Look, there are tools out there for every industry. From mortgage calculators to calorie counters to a parking spot finder and even video games that you can add to your site and make your own.

In other words, you don’t have to build something from scratch. Tools for every industry already exist, and you can buy them for pennies on the dollar.

Conclusion

I love SEO and always will. Heck, even though many SEOs hate how Google does algorithm updates, that doesn’t bother me either… I love Google, and they have built a great product.

But if you want to continually do well, you can’t rely on one marketing channel. You need to take an omnichannel approach and leverage as many as possible.

That way, when one goes down, you are still generating traffic.

Now, if you want to do really well, think about most of the large companies out there. You don’t build a billion-dollar business from SEO, paid ads, or any other form of marketing. You first need to build an amazing product or service.

So, consider adding tools to your site. The data shows they are more effective than content marketing, and they are more scalable.

Sure, you probably won’t achieve the results I achieved with Ubersuggest, but you can achieve the results I had with Quick Sprout. And you can achieve better results than what you are currently getting from content marketing.

What do you think? Are you going to add tools to your site?

PS: If you aren’t sure what type of tool you should add to your site, leave a comment and I will see if I can give you any ideas. 🙂

The post How I Beat Google’s Core Update by Changing the Game appeared first on Neil Patel.